The dialectic spirals onward. At this stage of the game, we should be thinking about some kind of composite "made" of polynomials, which should provide perspective for polynomials, and which can be viewed as a yet higher order generalization of the concept of "number". What we're looking for is a "matrix" aka a "linear transformation" aka an "operator"--even: an "observable". And we'll find hiding within a whole theory of differential equations.

We've already seen matrices and the vector spaces they act upon, only in disguise. Whenever we work with (x, y) or (x, y, z) coordinates, we're already using the idea of a vector space. Integration and differentiation turn out to be linear operators. Mobius transformations can be represented as 2x2 complex matrices with unit determinant. (Plainly, they were linear transformations: they didn't involve powers of $z$ greater than 1.) And matrices/linear transformations in general can furnish representations of symmetry groups, able to do justice to the law of group multiplication which is not necessarily commutative, so that $AB \neq BA$. For example, the order in which you do rotations of a sphere matter.

The unifying concept is that of a vector space. A vector space consists of multidimensional arrows called "vectors" and "scalars," the latter of which are just normal numbers. You can multiply vectors by scalars, and add vectors together, and you'll always get a vector in the vector space. The vector space could have a finite number of dimensions, or even an infinite number.

Indeed, it turns out that polynomials form a vector space. Think of the monomials, the powers of $z$, as being basis vectors! What do I mean?

Implicitly, we've worked with real vector spaces any time we've used $(x, y)$ and $(x, y, z)$ coordinates, projective or otherwise. For polynomials, we're working with a complex vector space: after all, we allow our polynomials to have complex coefficients. (Note we could use other "division algebras" for our scalars: reals, complex numbers, quaternions, or octonions, but complex numbers give us all we need.)

The point is that, real or complex, we can expand a vector in some "basis," in some reference frame, in terms of certain chosen directions. In fact, a vector (as a list of components) is meaningless without reference to which directions each component refers to.

For real 3D vectors, we often use $(1,0,0)$, $(0,1,0)$, and $(0,0,1)$ implicitly as basis vectors. Any 3D point can be written as a linear combination of these three vectors, which are orthonormal: they are at right angles, and of unit length.

Eg. $(x, y, z) = x(1,0,0) + y(0,1,0) + z(0,0,1)$.

But any set of linearly independent vectors can form a basis, although we'll stick to using orthonormal vectors. Linear independence means that each vector can't be written as a linear combination of the other vectors. (A quick way to check: if you stick the vectors together as the columns of a matrix, then this is as much to say: its determinant is not 0.)

In terms of polynomials, the powers in the variable act like basis vectors. If we add two polynomials, the coefficients of $z$ are added to the coefficients of $z$ separately from the coefficients of $z^{2}$, etc.

For real vector spaces, the length of a vector is given by $\sqrt{v \cdot v}$, where $v \cdot v$ is the inner product. In the finite dimensional case, just multiply the entries of the two vectors pairwise and sum. This is just the Pythagorean theorem in disguise. Given $\begin{pmatrix}x \\ y\end{pmatrix}$, its length is $\sqrt{xx + yy}$. This works for any number of dimensions.

You can also take the inner product of two different vectors. $\begin{pmatrix}a & b\end{pmatrix} \begin{pmatrix}c \\ d\end{pmatrix} = ac + bd$. Here the idea is that there are "row vectors" and "column vectors," the row vectors being in the "dual vector space" (this is more important when you work with non-linearly inpendent basis vectors.) The upshot is that juxtaposing a row and a column means: take the inner product. This quantity, also denoted $\vec{u} \cdot \vec{v}$, will be 0 if the vectors are at right angles. It can also be expressed $\vec{u} \cdot \vec{v} = |\vec{u}||\vec{v}|cos(\theta)$, where $|u|$ and $|v|$ are the lengths of the vectors in question, and $\theta$ is the angle between them. From this one can calculate the angle: $\theta = cos^{-1} \frac{\vec{u} \cdot \vec{v}}{|\vec{u}||\vec{v}|}$.

In other words, an inner product gives us a way of defining the lengths and angles of vectors. And there are many different inner products, as we'll see. For example, distance squared in Minkowski space is given by $t^{2} - x^{2} - y^{2} - z^{2}$: the first component (time) has a different sign that the last three (space). We could implement this inner product like this:

$\begin{pmatrix} t_{0} & x_{0} & y_{0} & z_{0} \end{pmatrix} \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & -1 & 0 & 0 \\ 0 & 0 & -1 & 0 \\ 0 & 0 & 0 & -1 \end{pmatrix} \begin{pmatrix} t_{1} \\ x_{1} \\ y_{1} \\ z_{1} \end{pmatrix} = t_{0}t_{1} - x_{0}x_{1} - y_{0}y_{1} - z_{0}z_{1} $.

The matrix in the middle is known as the "metric."

We can expand a vector in a given basis by taking the inner product of the vector with each of the basis vectors: $ \mid v_{b} \rangle = \sum_{i} \langle v \mid b_{i} \rangle \mid b_{i} \rangle$. These will be the components of the vector in the new basis.

We could also organize the basis vectors into the columns of a matrix, so that applying the matrix to the vector $M\vec{v} = \tilde{\vec{v}}$ leads to $\tilde{\vec{v}}$ the representation of the same vector in the $M$ basis.

We can see that matrix multiplication is just a generalization of the inner product: it's in fact the inner product of the column vector which each of the rows in turn (or of a row vector with each column vector in turn). We can switch back by multiplying by the inverse of $M$: $M^{-1}\tilde{\vec{v}} = \vec{v}$.

Indeed, $M^{-1}M = I$, the identity matrix. Multiplying matrices $AB$ is just the inner product rule iterated again. The $ M_{i,j}$ component of a matrix, or grid of numbers, is the inner product of the $i$th row of A with the $j$th column of B. And the identity matrix, all zeros with 1's along the diagonal, has the special property that $IA = AI = A$.

The multiplication rule for matrices is noncommutative, but associative--often matrices have inverses--and there's an identity element. It makes sense then that we can often represent symmetry groups (which follow these same axioms) using collections of matrices, encoding group multiplication as matrix multiplication.

Matrix multiplication might preserve some property of the vector. For example, orthogonal real matrices (having orthogonal unit vectors as rows and columns) have the property that $O^{T}O = I$, so that $O^{-1} = O^{T}$, the transpose of O, with rows and columns swapped. These matrices will preserve the length of the vectors they act upon, and they form a group: in this way, they can represent, for example, rotations in any number of dimensions. The generalization of these to complex matrices are the unitary matrices which preserve the length of complex vectors, and for which $U^{\dagger}U = I$, or $U^{-1} = U^{\dagger}$, the conjugate transpose.

Linear transformations allow us to represent shifts in perspective: for example, a scene seen from a rotated or translated point of view. Such shifts in perspective can be defined in terms of what they leave invariant, in other words, what they leave the same.

For example, consider rotations around the Z axis. They leave two points fixed: $(0,0,1)$ and $(0,0,-1)$; we just rotate around the axis they form by a certain angle.

We define the eigenvectors and eigenvalues of a matrix like so:

$M\vec{v} = m\vec{v}$

In other words, on an eigenvector $\vec{v}$, the act of matrix multiplication reduces down to familiar scalar multiplication by the eigenvalue associated to the eigenvector $m$. In other words, $M$ doesn't rotate $\vec{v}$ at all: it just stretches or shrinks (or complex rotates it) it by $m$.

A matrix can be defined in terms of its eigenvalues and eigenvectors, the special directions that it leaves fixed, except for some dilation or complex rotation along that axis. Not all matrices have a complete set of eigenvectors, and sometimes there are different eigenvectors with the same eigenvalue. One interesting fact is that Hermitian matrices, complex matrices for which $H^{\dagger} = H$, have all real eigenvalues and have orthogonal vectors as eigenvectors, and so these eigenvectors form a complete basis, or set of directions, for the vector space in question.

Expressed in its "own" basis, in the basis formed by its eigenvectors, any matrix can be written as a diagonal matrix, and along the diagonal are: the eigenvalues. An n x n "defective matrix" has less than n linearly independent eigenvectors. But if a matrix does have n linearly independent eigenvectors, it can be diagonalized, and its eigenvectors form a basis/coordinate frame for the vector space. A defective matrix can, however, be brought into block diagonal form, which is the next best thing.

Just like a polynomial is "composed of" its complex roots, we could view a matrices as being "composed of" polynomials. And just as polynomials give context to their roots, a matrix gives context to polynomials. In fact, in some sense, a matrix is a polynomial, but possibly distorted in perspective. Here's a nice way of seeing that.

Consider how we find the eigenvectors of a matrix. We'll stick to the finite dimensional 2x2 case.

If we have, $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, we want:

$ A\vec{v} = \lambda\vec{v}$

$ A\vec{v} - \lambda\vec{v} = 0$

$ (A - \lambda I)\vec{v} = 0$

So that:

$\begin{pmatrix} a - \lambda & b \\ c & d - \lambda \end{pmatrix} \begin{pmatrix} v_{0} \\ v_{1} \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix}$

Therefore, $\begin{pmatrix} a - \lambda & b \\ c & d - \lambda \end{pmatrix}$ shouldn't be invertible: since it has to send $\vec{v}$ to the 0 vector.

A matrix is not invertible if its determinant is 0. So we want:

$\begin{vmatrix} a - \lambda & b \\ c & d - \lambda \end{vmatrix} = 0$

So that: $ (a - \lambda)(d - \lambda) - (b)(c) = 0 $, or:

$ ad - a\lambda -d\lambda + \lambda^{2} - bc = 0$, or:

$ \lambda^{2} - (a + d)\lambda + (ad - bc) = 0$

So the eigenvalues $\lambda_{i}$ are the roots of this polynomial, called the characteristic polynomial.

We can express our matrix in another basis with a change-of-basis transformation:

$A^{\prime} = U^{-1}A U$

We'll find that $A^{\prime}$ has exactly the same eigenvalues. They are invariant under basis transformations. Since the trace is the sum of the eigenvalues, and the determinant is the product of the eigenvalues, they too are left the same. And as mentioned above, if the matrix has a complete set of eigenvectors, than we can always bring it to diagonal form, a matrix of all 0's, with the eigenvalues along the diagonal. $ D = S^{-1}A S$, where $S$'s columns are the eigenvectors.

And so, we can think about the matrix as secretly just being a polynomial, which defines some roots, which are the eigenvalues. But the matrix representation keeps track of the fact that we may be viewing the polynomial in a different basis.

One interesting fact is that the matrix itself is a root of its characteristic polynomial, reinterpreted in terms of matrices. If $f(z) = az^2 + bz + c$ is the characteristic polynomial of a matrix $A$, such that for its eigenvalues $\lambda_{i}$, $f(\lambda_{i}) = 0$, then also $f(A) = aAA + bA + cI = 0$, where the $0$ is the zero matrix.

Another interesting fact is that in quantum mechanics, two observables are "simultaneously measurable" if their Hermitian matrices can be simultaneously diagonalized.

Matrices can be used to solve systems of linear equations.

For example, if we had:

$ax + by + cz = k$

$dx + ey + fz = l$

$gx + hy + iz = m$

We could solve it as a matrix equation:

$\begin{pmatrix} a & b & c \\ d & e & f \\ g & h & i \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix} = \begin{pmatrix} k \\ l \\ m \end{pmatrix} \rightarrow A\vec{x} = \vec{y}$.

We're lucky if A is invertible: Then we can just do: $\vec{x} = A^{-1}\vec{y}$.

Many interesting properties of a matrix become evident when you consider the homogenous case, where $A\vec{x} = \vec{0}$. If this equation is true, it means that $\vec{x}$ is in the "null space" of A, which is a vector space of its own: since you can add two of them together, and get another such vector: $A(\vec{a} + \vec{b}) = 0$, since $A\vec{a} = 0$ and $A\vec{b} = 0$.

One can also investigate the "row" and "column space". The column space is the vector space of all possible outputs of the matrix as a linear transformation. According to the Rank-nullity theorem, the dimension of the nullspace plus the dimension of the column space (the "rank" which is the same as the dimensionality of the row space) = the dimension of the whole vector space. So in a sense, we can define a matrix by what vectors it annihilates.

When one works with differential equations, problems where one is given an algebraic relationship between the derivatives of a function and asked to find a set of equations that satisfy those relationships, for example, wave equations, classical or quantum, one ultimately sets up something like:

$ \hat{H}\phi = 0 $

Even if one starts off with some $\hat{H}\phi = whatever$, one always recasts things to get a homogenous problem, since solutions to such equations form a vector space: and you can solve the inhomogenous equations by adding a particular inhomogenous solution to your sum of homogenous solutions, and so parameterize any solution to the inhomogenous problem. Here's an intuition: when solving the differential equations governing a vibrating 1D string fixed at its ends, to homogenize the problem is as much to consider the string in its equilibrium position as a "reference frame" and describe the string's behavior as deviations from that equilibrium, rather than in terms of the $x$ and $y$ axes.

A bit more:

$\hat{H}$ is a differential operator: some expression involving the variables and the operator $\hat{D}$, which takes a derivative. In a simple, single variable case:

E.g, $y'' + y' - 2y = 0 \rightarrow (\hat{D}^{2} + \hat{D} - 2)y = 0$

Let's consider the polynomial $d^{2} + d - 2 = (d-1)(d+2) = 0$. It has roots at $\{1, -2\}$. The above equation is true if you plug in $\hat{D}$ and $d_{i}$. So we can think about this as the characteristic polynomial of some linear transformation with this matrix:

$ \begin{pmatrix} 1 & 0 \\ 0 & -2 \end{pmatrix}$

If $\lambda$ is a root of the polynomial, then $e^{\lambda x}$ is a solution to the differential equation.

$\frac{d^{2} e^{\lambda x}}{dx^{2}} + \frac{de^{\lambda x}}{dx} - 2e^{\lambda x} = \lambda^{2}e^{\lambda x} + \lambda e^{\lambda x} -2e^{\lambda x} = \lambda^{2} + \lambda - 2 = 0$

So we have two roots and two equations: $e^{x}$ and $e^{-2x}$, and therefore a general solution is a linear combination of them $y = ae^{x} + be^{-2x}$. The solution space forms a two dimensional vector space, and you can freely choose $a$ and $b$ and what you get will satisfy the different equation. If you wanted, you could keep track of that $a$'s and $b$'s separately:

$\frac{dy(x)}{dx} = \hat{H}y(x) \rightarrow y(x) = e^{\hat{H}x}y_0$

Where you can imagine that $y_{0}$ is just the coefficients $a$ and $b$ in a vector.

Indeed: you can see that$ \begin{pmatrix} 1 & 0 \\ 0 & -2 \end{pmatrix}$ acts like taking the derivative of some $\begin{pmatrix} e^{x} \\ e^{-2x} \end{pmatrix}$.

And now a word on complex vector spaces.

For complex vector spaces, we'll use the bracket notation $\langle u \mid v \rangle$, where recall that the $\langle u \mid$ is a bra, which is the complex conjugated row vector corresponding to the column vector $\mid v \rangle$. So the inner product for complex vectors is a complex number, and just as for real vectors, if the vectors are orthogonal, the inner product is 0. We can use the inner product to expand a vector given a set of basis vectors.

Complex vector spaces are a little unfamiliar. Here's some intuition. First of all, notice that when we add two complex vectors, the individual components can cancel out in a whole circle's worth of directions, not just in terms of positive and negative, as real vectors do. This will leads in the end to so-called "quantum intererence."

But here's a little more intuition.

Consider the simplest possible example: a one dimensional complex vector space, which may not even qualify, but let's see. It's just a single complex number: $\alpha = x + iy$. The length of a "vector" is just $\sqrt{\alpha^{*}\alpha} = \sqrt{x^{2} + y^{2}}$. Let's look at the inner product: $\langle b \mid a \rangle = b^{*}a$.

$b^{*}a = (b_{R} - ib_{I})(a_{R} + ia_{I})$

$\rightarrow b_{R}a_{R} + ib_{R}a_{I} - ib_{I}a_{R} + b_{I}a_{I}$

$\rightarrow (b_{R}a_{R} + b_{I}a_{I}) + i(b_{R}a_{I} - b_{I}a_{R})$.

Define $\vec{a} = \begin{pmatrix} a_{R} \\ a_{I} \end{pmatrix}$ to be the 2D real vector corresponding to the real and imaginary parts of the 1D complex vector. Similarly, $\vec{b} = \begin{pmatrix} b_{R} \\ b_{I} \end{pmatrix}$.

We can see that the real part of the 1D complex inner product is just $\vec{b} \cdot \vec{a} = |\vec{a}||\vec{b}|cos(\theta)$, the normal inner product defined on the 2 dimensional real vector space. This is the $(b_{R}a_{R} + b_{I}a_{I})$ term.

But what about the imaginary part?

We could write it like:

$\begin{vmatrix} b_{R} & b_{I} \\ a_{R} & a_{I} \end{vmatrix} = b_{R}a_{I} - b_{I}a_{R}$

We make a matrix with the real vectors $\vec{b}$ and $\vec{a}$ as rows, and find the determinant. This gives us the imaginary part of the complex inner product.

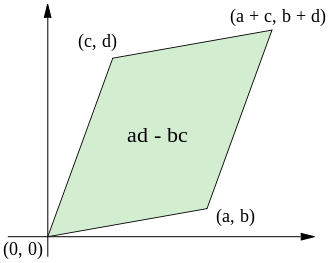

It happens that the determinant $\begin{vmatrix} a & b \\ c & d \end{vmatrix}$ is the signed area of the parallelogram with $\begin{pmatrix} a \\ b \end{pmatrix}$ and $\begin{pmatrix} c \\ d \end{pmatrix}$ as sides.

So: if we have a 1D complex vector space ($x+iy$), we can instead work with a 2D real vector space $\begin{pmatrix} x \\ y \end{pmatrix}$. We can just work with the complex numbers as "arrows in 2D." The inner product between the 2D arrows gives the real part of $\langle b \mid a \rangle$, and the area of the parallelogram formed by the 2D arrows gives the imaginary part of $\langle b \mid a \rangle$.

This generalizes to N-dim complex vector spaces. We can instead work with a 2N-dimensional real vector space. The real part of $\langle b \mid a \rangle$ is given by the sum of all the inner products between the 2D arrows representing the components, and the imaginary part is the sum of all the areas between the 2D arrows representing the components. The imaginary part of the inner product is called a "symplectic" form, an idea which we shall return to.

Alternatively, we could describe $b_{R}a_{I} - b_{I}a_{R}$ as $\begin{pmatrix} b_{R} & b_{I} \end{pmatrix} \begin{pmatrix} a_{I} \\ -a_{R} \end{pmatrix}$.

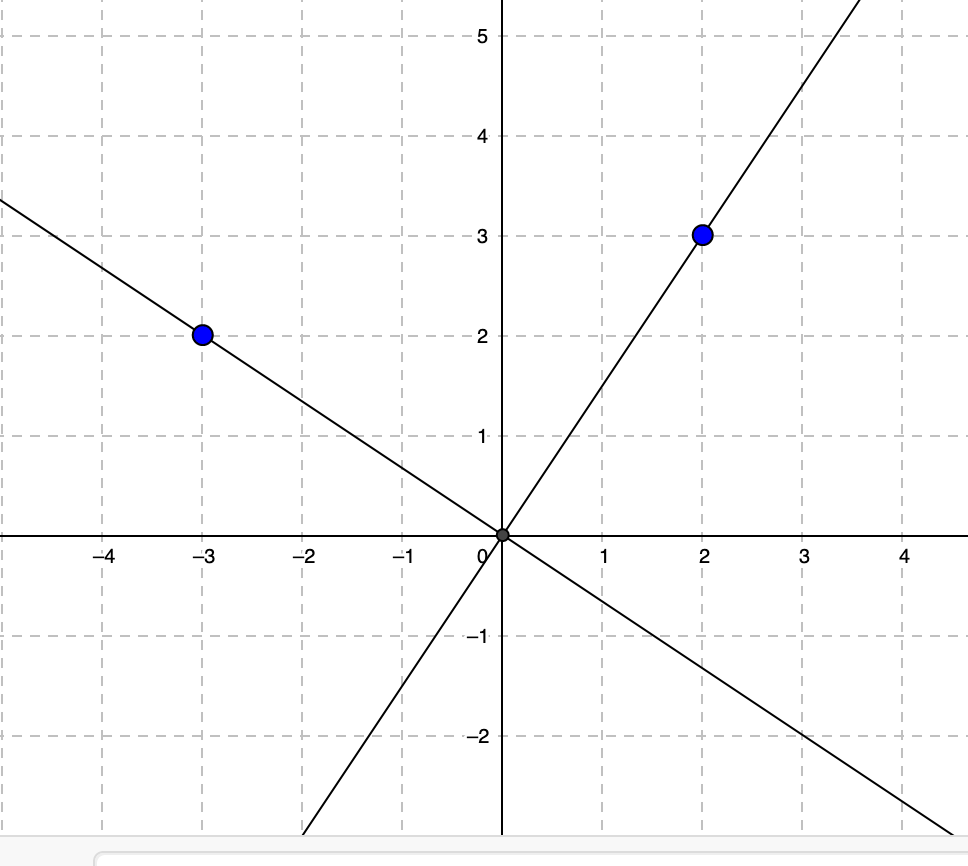

If you have a 2D vector $\begin{pmatrix} x \\ y \end{pmatrix}$, then $\begin{pmatrix} y \\ -x \end{pmatrix}$ is the former rotated 90 degrees. The arrow to (3,2) is orthogonal to the arrow (2, -3).

So instead of finding the area of the parallelogram formed by $\vec{b}$ and $\vec{a}$, we could rotate $\vec{a}$ 90 degrees, and take the inner product with $\vec{b}$, and get the same answer. And this generalizes as well: we can choose some higher dimensional 90 degree rotation on the 2N real vector space to define the imaginary part of the complex inner product. In other words, $\langle b \mid a \rangle = (\vec{b} \cdot \vec{a}) + i(\vec{b} \cdot \vec{a}_{\perp})$

Two 2D vectors at right angles (say, (1,0) and (0,1)) will have a complex inner product of $0 + i$. Two parallel 2D vectors (say (1,0) and (1,0)) will have a complex inner product of $1 + 0i$. In this case, the complex inner product can only be totally 0 if one of the vectors is (0,0). But once you have an N-dim complex vector space, the vectors can be complex orthogonal to each other: orthogonal to two orthogonal vectors, a line perpendicular to a plane. There is something three-dimensional about the complex inner product.

(If a 90 degree rotation in very high dimensional space seems abstract, just consider, say, for 2D complex, 4D real: $(a, b, c, d) \cdot (b, -a, d, -c) = 0$: just swap and negate.)

Eventually, we'll interpret the complex inner product to represent a probability amplitude. And we'll also show how this notion is intimately tied up with yet more interesting geometry.

Let's look at an example of a complex vector space, really the simplest interesting one: two dimensions.

We've already alluded to the fact that we can treat $\mathbb{C} + \infty$ as a complex projective space: aka the complex projective line. It's just the real projective line (back from our rational number days) complexified! In other words, it's a sphere.

Given some $\alpha$ which can be a complex number or infinity, we can represent it as a complex 2D vector as:

$\alpha \rightarrow \begin{pmatrix} 1 \\ \alpha \end{pmatrix}$ or $\begin{pmatrix} 0 \\ 1 \end{pmatrix}$ if $\alpha = \infty$.

In reverse:

$ \begin{pmatrix} a \\ b \end{pmatrix} \rightarrow \frac{b}{a}$ or $\infty$ if $a=0$.

We're free to multiply this complex vector by any complex number and this won't change the point it represents on the sphere. So our complex 2D vector corresponds to a direction in 3D, a length, and a phase. It can therefore represent a classical spinning object: the direction being the axis of rotation, the length being the speed of rotation, and the phase being how far it's turned around the axis at a given moment. It could also represent an incoming classical photon, which is specified by a 3D direction, with a certain color (length), and polarization (phase). Generally, unnormalized 2D complex vectors are referred to as spinors.

If we normalize the vector so its length is 1, we can view it as the state of a qubit: for example, the spin angular momentum of a spin-$\frac{1}{2}$ quantum particle. In that case, the rotation axis picked out can only be inferred as a statistical average. Looking ahead: supposing we've quantized along the Z-axis, then $aa^{*}$ is the probability of measuring this qubit to be $\uparrow$ along the Z direction, and $bb^{*}$ is the probability of measuring it to be $\downarrow$. Here, "quantized along the Z-axis" just means that we take eigenstates of the Pauli Z operator to be our basis states (so that each component of our complex vector weights one of those eigenstates). The point I want to make here is that the normalization condition is necessary for us to be able to interpret $|a|^{2}$ as a probability.

It's worth noting that if we take the normalization condition, $aa^{*} + bb^{*} = 1$, and expand out the complex and imaginary parts of each, we get: $\alpha^{2} + \beta^{2} + \gamma^{2} + \delta^{2} = 1$. If $\alpha^{2} + \beta^{2} = 1$ is a point on a circle, and $\alpha^{2} + \beta^{2} + \gamma^{2} = 1$ is a point on a sphere, then $\alpha^{2} + \beta^{2} + \gamma^{2} + \delta^{2} = 1$ is a point on a 3-sphere: the 3-sphere lives in four dimensional space, and just as a circle is a 1D space that loops back on itself, so that if you go too far in one direction, you come out in the opposite direction, and the sphere is a 2D space that loops around, you can think of the 3-sphere as a 3D space that if you go forever in one direction, you loop back around. But we also know from the above, that we can break down a point on a 3-sphere as: a point on a 2-sphere, plus a complex phase, a point on the circle. This is known as the "Hopf fibration." The idea is that you can think of the 2-sphere as a "base space" with a little "fiber" (the phase) living above each point. There's actually 4 of these (non-trivial) fibrations, corresponding to the 4 division algebras. The 1-sphere breaks into 1-sphere with a 0-sphere (just two equidistant points!) at each point (related to the reals). The 3-sphere breaks into the 2-sphere with a 1-sphere at each point (related to the complex numbers). The 7-sphere breaks into a 4-sphere with a 3-sphere living at each point (related to the quaternions). And a 15-sphere breaks into an 8-sphere with a 7-sphere living at each point (related to the octonians). Others can go into more detail (such as John Baez)! (There's an interesting connection in the latter two cases between the entanglement between two and three qubits.)

Let's play a little game. On the Riemann sphere, the 6 cardinal points are: $\{1, -1, i, -i, 0, \infty\}$. Let's translate them into spinors.

$ 1 \rightarrow \begin{pmatrix} 1 \\ 1 \end{pmatrix} $, $ -1 \rightarrow \begin{pmatrix} 1 \\ -1 \end{pmatrix} $

$ i \rightarrow \begin{pmatrix} 1 \\ i \end{pmatrix} $, $ -i \rightarrow \begin{pmatrix} 1 \\ -i \end{pmatrix} $

$ 0 \rightarrow \begin{pmatrix} 1 \\ 0 \end{pmatrix} $, $ \infty \rightarrow \begin{pmatrix} 0 \\ 1 \end{pmatrix} $

Notice that these pairs of vectors, which represent antipodal points on the sphere, are in fact: complex orthogonal.

$ \begin{pmatrix} 1 & 1 \end{pmatrix} \begin{pmatrix} 1 \\ -1 \end{pmatrix} = 0$

$ \begin{pmatrix} 1 & -i \end{pmatrix} \begin{pmatrix} 1 \\ -i \end{pmatrix} = 0$

$ \begin{pmatrix} 1 & 0 \end{pmatrix} \begin{pmatrix} 0 \\ 1 \end{pmatrix} = 0$

Now here's something implict in what we've already said:

If you have a set of eigenvalues and eigenvectors, you can work backwards to a matrix representation by first forming a matrix whose columns are the eigenvectors. Call it $C$. Take its inverse: $C^{-1}$. And then construct $D$, a matrix with the eigenvalues along the diagonal. The desired matrix: $M = CDC^{-1}$.

But first recall that to find the inverse of a 2x2 matrix $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$: $A^{-1} = \frac{1}{det(A)} \begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$.

For example:

$C = \begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}$, $C^{-1} = -\frac{1}{2} \begin{pmatrix} -1 & -1 \\ -1 & 1 \end{pmatrix}$, $D = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}$

$X = CDC^{-1} = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$

$C = \begin{pmatrix} 1 & 1 \\ i & -i \end{pmatrix}$, $C^{-1} = \frac{i}{2} \begin{pmatrix} -i & -1 \\ -i & 1 \end{pmatrix}$, $D = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}$

$Y = CDC^{-1} = \begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix}$

$C = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}$, $C^{-1} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}$, $D = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}$

$Z = CDC^{-1} = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}$

import numpy as np

import qutip as qt

def LV_M(L, V):

to_ = np.array(V).T

from_ = np.linalg.inv(to_)

D = np.diag(L)

return qt.Qobj(np.dot(to_, np.dot(D, from_)))

Xp = np.array([1, 1])

Xm = np.array([1, -1])

X = LV_M([1, -1], [Xp, Xm])

Yp = np.array([1, 1j])

Ym = np.array([1, -1j])

Y = LV_M([1, -1], [Yp, Ym])

Zp = np.array([1, 0])

Zm = np.array([0, 1])

Z = LV_M([1, -1], [Zp, Zm])

print(X, qt.sigmax())

print(Y, qt.sigmay())

print(Z, qt.sigmaz())

These are the famous Pauli matrices. We can see that X+ and X- are eigenvectors of the Pauli X matrix, and when it acts on them, it multiplies X+ by 1, and X- by -1. Similarly, for Pauli Y and Z.

One nice thing about them is we have a new way of extracting the (x, y, z) coordinate of a spinor $\psi$. Instead of getting the ratio of the components and stereographically projecting to the sphere, we can form:

$(\langle \psi \mid X \mid \psi \rangle, \langle \psi \mid Y \mid \psi \rangle, \langle \psi \mid Z \mid \psi \rangle)$

(You could think of $\langle \psi \mid X \mid \psi \rangle$ as an inner product where the metric is given by Pauli X.)

Another way of thinking about it is as an expectation value. In statistics, an expectation value, for example, one's expected "winnings," is just: a sum over all the rewards/losses weighted by the probability of the outcome. As we said before, if we have normalized complex vectors, we can interpret the inner product $\langle \phi \mid \psi \rangle = \alpha$ as a "probability amplitude"-- the corresponding probability is $|\langle \phi \mid \psi \rangle|^{2} = \alpha^{*}\alpha$, in other words, the area of the square on the hypoteneuse of the complex number as 2D arrow.

It turns out we can rewrite things like this:

$\langle \psi \mid X \mid \psi \rangle = \sum_{i} \lambda_{i} |\langle v_{i} \mid \psi \rangle|^{2} $

We get the probabilities that $\psi$ is in each of the eigenstates, and multiply them by the corresponding eigenvalues (which is like the "reward/loss"), and sum it all up. And so, we can interpret the (x, y, z) coordinates of our point in a statistical sense, as expectation values.

Indeed, the Pauli matrices are Hermitian: meaning they are equal to their own conjugate transpose, and they have real eigenvalues and a complete set of orthogonal eigenvectors: and so this statistical interpretation works (we'll never get an imaginary expectation value, for example).

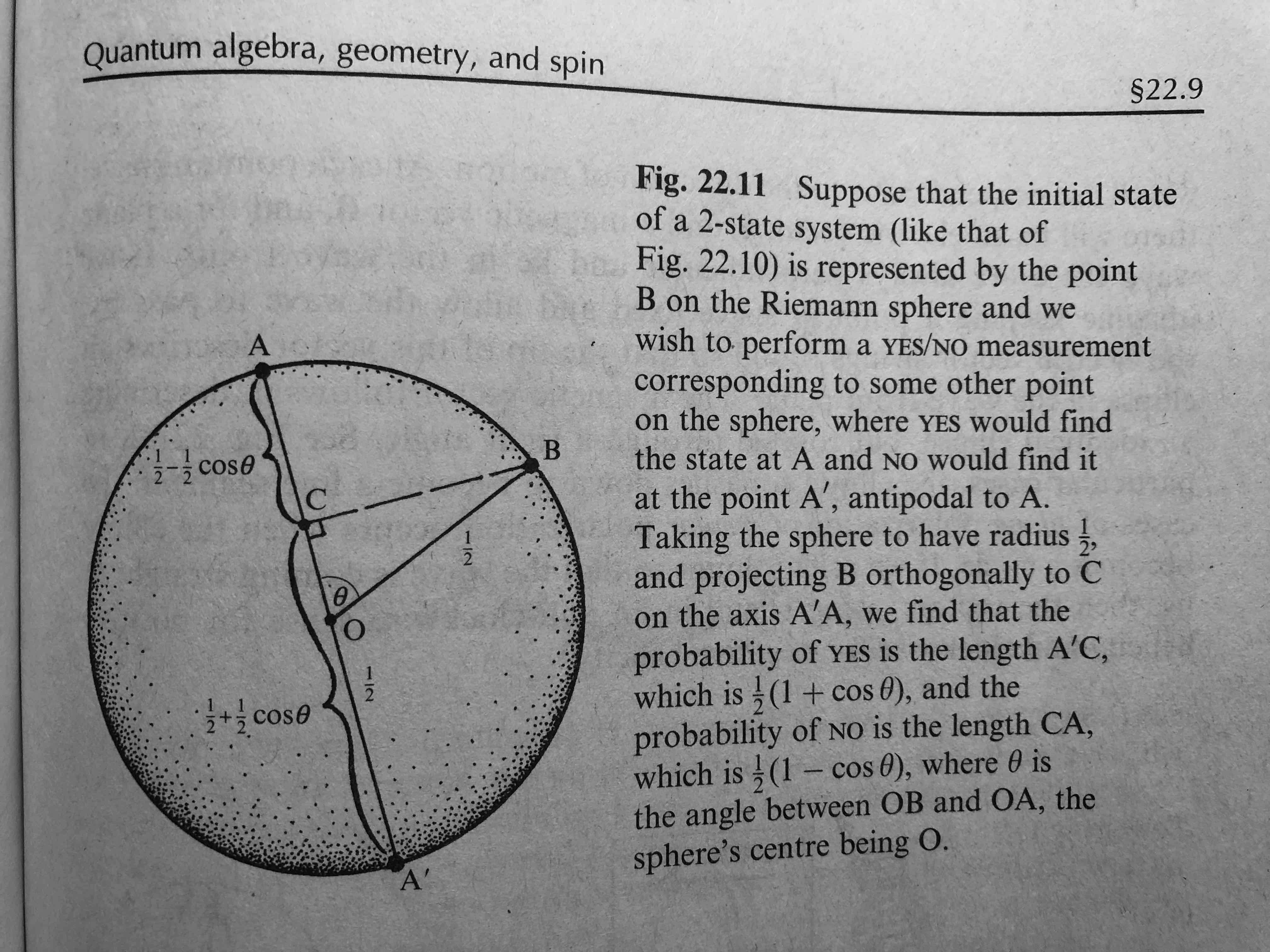

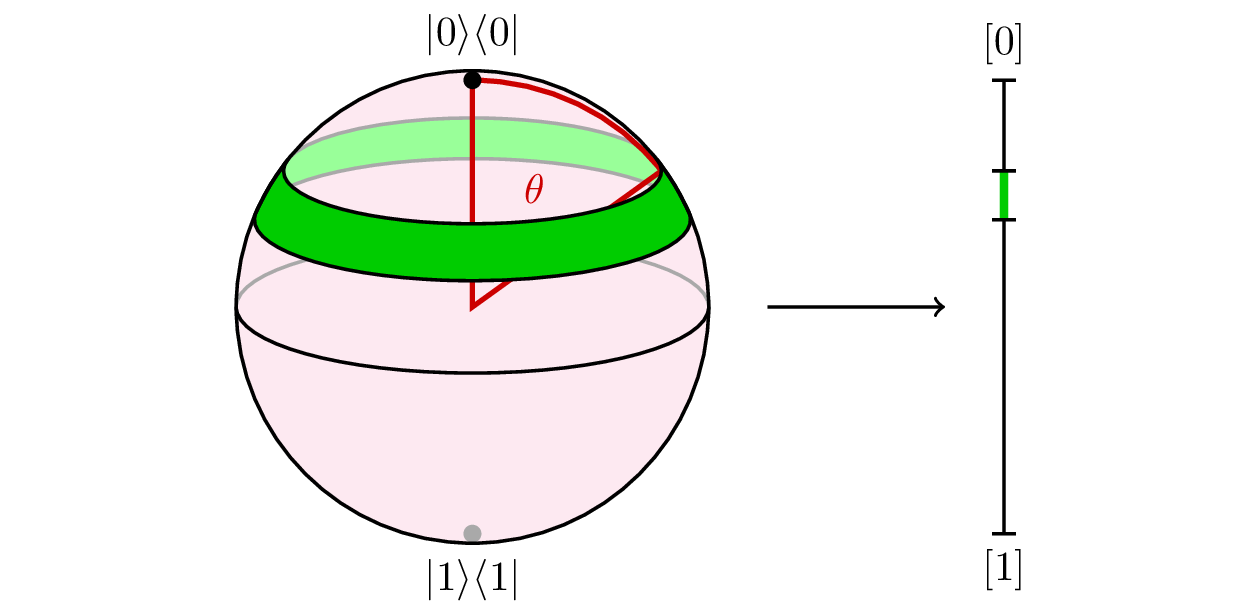

Here's a more geometrical way of thinking about it. We could choose any two antipodal points on the sphere and form a Hermitian matrix with eigenvalues ${1, -1}$ out of them. This defines an oriented axis. Suppose you have a point on the sphere representing $\psi$. Project that point perpendicularly onto the axis defined by the Hermitian matrix. This divides that line segment into two pieces.

Imagine that the line is like an elastic band that snaps randomly at some location, and then the two ends are dragged to the two antipodal points, carrying the projected point with it. The projected point will end up at one or other of the two antipodes with probabilites $|\langle v_{1}\mid\psi\rangle|^{2} = \frac{1 + \vec{\psi} \cdot \vec{v_{1}}}{2}$ and $|\langle v_{-1}\mid\psi\rangle|^{2} = \frac{1 + \vec{\psi} \cdot \vec{v_{-1}}}{2}$.

And so the expectation value will be: the coordinate of that point in the chosen direction with (0,0,0) being the origin.

$ (1)\frac{1 + \vec{\psi} \cdot \vec{v_{1}}}{2} + (-1)\frac{1 + \vec{\psi} \cdot \vec{v_{-1}}}{2} = \frac{1}{2} ( \vec{\psi} \cdot \vec{v_{1}} - \vec{\psi} \cdot \vec{v_{-1}} ) = \frac{1}{2} ( \psi \cdot ( \vec{v_{1}} - \vec{v_{-1}} ))$.

But we know that $\vec{v_{1}}$ and $\vec{v_{-1}}$ are antipodal $\vec{v_{-1}} = -\vec{v_{1}}$: , so: $\frac{1}{2} ( \vec{\psi} \cdot ( 2\vec{v_{1}}))$ = $\vec{\psi} \cdot v_{1}$, which is indeed: the coordinate of the (x, y, z) vector $ \vec{\psi}$ in the chosen direction.

Archimedes's tombstone famously was a sphere inscribed in a cylinder.

What he had discovered is that cylindrical projection preserves area.

We've already discussed the stereographic projection from the sphere to the plane which is conformal, or "angle preserving." Cylindrical projection, in contrast, projects each point on the sphere outward onto a surrounding cylinder, and preserves area.

This amounts to what we've just been doing:

Probability amplitudes live in sphere-world, while probabilities live on the line. And here's the connection: if we choose a point uniformly randomly on the sphere, we are as much choosing a point uniformly randomly on the line: since the cylindrical projection preserves area or "measure" (up to some constant factor). (That this works for higher dimesional spheres and simplices isn't trivial and underlies the general interpretation of complex vectors as furnishing probability amplitudes!) Cf. this. It's all about the relationship between the 1-norm of probabilities: $1 = a + b + c$, and the 2-norm of amplitudes: $1 = |\alpha|^{2} + |\beta|^{2} + |\gamma|^{2}$.

Matrices themselves actually form vector spaces, and naturally the Pauli matrices are orthogonal from this perspective. The inner product that's relevant is: $tr(B^{\dagger}A)$.

For example, $ tr(\begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix}\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}) = tr(\begin{pmatrix} -i & 0 \\ 0 & i \end{pmatrix}) = 0$.

In fact, any 2x2 Hermitian matrix can be written as a real linear combination of Pauli's: $tI + xX + yY + zZ$: the Pauli matrices form a basis. And so it's easy to write the Hermitian matrix corresponding to any axis.

Moreover, we can use the Pauli's to do rotations.

We already know about the famous expression for the exponential function:

$e^{x} = \sum_{n=0}^{\infty} \frac{x^{n}}{n!} = \frac{1}{0!} + \frac{x}{1!} + \frac{x^{2}}{2!} + \frac{x^{3}}{3!} + \frac{x^{4}}{4!} + \dots$

Well, why not try plugging a matrix into it instead of a scalar?

$e^{X} = \sum_{n=0}^{\infty} \frac{X^{n}}{n!} = \frac{I}{0!} + \frac{X}{1!} + \frac{X^{2}}{2!} + \frac{X^{3}}{3!} + \frac{X^{4}}{4!} + \dots$, where the powers are interpreted as $X$ matrix multiplied by itself $n$ times.

Here's a way to think about this. We find the eigenvalues and eigenvectors of $X$ and diagonalize it with a basis transformation. For a diagonal matrix: $\begin{pmatrix} \lambda_{0} & 0 \\ 0 & \lambda_{1} \end{pmatrix}$, where the $\lambda$'s are the eigenvalues, $e^{\begin{pmatrix} \lambda_{0} & 0 \\ 0 & \lambda_{1} \end{pmatrix}}$ is just the naive: $\begin{pmatrix} e^{\lambda_{0}} & 0 \\ 0 & e^{\lambda_{1}} \end{pmatrix}$. Then use the inverse transformation to return to the standard basis. Now you have your matrix exponential.

So what does $e^{Xt}$ do? It implements a boost in the X direction on a spinor.

Before we stuck an $i$ in the exponential and got: a parameterization of 2D rotations: $e^{i\theta} = cos(\theta) + i sin(\theta)$. As $\theta$ goes on, $e^{i\theta}$ winds around the circle, tracing out a cosine along the x-axis and a sine along the y-axis.

Similarly, if we stick an $i$ in the matrix exponential and use our Pauli's, we can get a 3D rotation. For example: $e^{-iX\frac{\theta}{2}}$ implements a 3D rotation around the X axis by an angle $\theta$. $e^{-iY\frac{\theta}{2}}$ similarly rotates around the Y-axis. The minus sign is conventional, and the $\frac{1}{2}$ is there because SU(2) is the double cover of SO(3) (the group of normal 3D rotations), but we'll leave it to the side for now, but shows up in the way that the overall phase rotates during a rotation of the sphere. As it well known, a spinor rotated a full turn goes to the negative of itself, and requires two full turns to come back to itself completely. We'll return to this later.

These rotation matrices are unitary matrices: they preserve the norms of vectors. (And in fact they are special unitary matrices.) If you exponentiate a Hermitian matrix, you get a unitary matrix: if you think about a Hermitian matrix as a (perhaps multidimensional) pole, then the unitaries are like rotations around that pole. Another interpretation, statistically: as rotations preserve the length of a vector, unitary matrices preserve the fact that probabilities sum to 1.

Indeed, $e^{iHt}$ turns out to be the general form of time evolution in quantum mechanics. $\psi(x, t) = e^{iHt}\psi(x,0)$, where $H$ is the operator representing the energy, the Hamiltonian, is just another way of writing the Schrodinger equation: $\frac{d\psi(x,t)}{dt} = -iH\psi(x, t)$.

The rotation matrices form a representation of the group $SU(2)$, which is an infinite continuous symmetry group. For each element in the group, there is a corresponding matrix. This is how representation theory works: finding a certain group of interest to be a subgroup of the a matrix group, and better yet if the representation is unitary because then you can do quantum mechanics!

The Pauli matrices are generators for $SU(2)$. And you can think about $SU(2)$ as itself being a manifold, a kind of space, where each point in the space is a different matrix, and (to simpify things) the generators tell you how to go in different directions in this space, like a tangent space.

Elements of $SU(2)$ can be written as some $tI + ixX + iyY + izZ$, and we can can construct elements of a one-parameter subgroup with $e^{iHt}$, where $t$ is the parameter, and $H$ is a 2x2 Hermitian matrix. In other words, we can implement rotations.

If we put rotations and boosts together, we get $SL(2, \mathbb{C})$, the Lorentz group in disguise. And in fact, they are nothing other than the Mobius transformations from before.

Indeed: given a Mobius transformation $f(z) = \frac{az + b}{cz + d}$ with $ad - bc \neq 0$ acting on $\mathbb{C} + \infty$, we can make the $SL(2, \mathbb{C})$ matrix $\begin{pmatrix} a & b \\ c & d \end{pmatrix}$ with $det = 1$ , which, as we know, are generated by the Pauli matrices: $X, Y, Z$ for boosts, and $iX, iY, iZ$ for rotations (6 generators in all)-- and act on spinors (and on matrices too for that metter ). So there you go.

Because they have determinant 1, they preserve the Lorentz metric. Matrices with determinant 1 preserve the determinant of matrix they act upon. One forms the Hermitian matrix: $H = tI + xX + yY + zZ = \begin{pmatrix}t+z & x-iy \\ x+iy & t-z \end{pmatrix}$. The determinant of this matrix is $ (t+z)(t-z) - (x-iy)(x+iy) = t^{2} - z^{2} - y^{2} - z^{2}$, and so when acted upon $M^{\dagger}HM$ where $M$ is an $SL(2,\mathbb{C}))$ matrix, the Lorentz norm is preserved.

But let's return to polynomials for a moment with the aim of interpreting n-dimensional complex vectors in one very useful way.

First, I want to point out that moving from $\mathbb{C} + \infty$ aka $\mathbb{CP}^{1}$ to $\mathbb{C}^{2}$ is just like considering "a root" vs a "monomial."

Suppose we have a polynomial with a single root, a monomial: $f(z) = c_{1}z + c_{0}$. Let's solve it:

$ 0 = c_{1}z + c_{0}$

$ z = -\frac{c_{0}}{c_{1}} $

Indeed, we could have written $f(z) = (z + c_{0}/c_{1})$. The point is that the monomial $f(z) = c_{1}z + c_{0}$ can be identified with its root up to multiplication by any complex number--just like our complex projective vector!

If we had some complex number $\alpha$, which we upgraded to a complex projective vector, we could turn it into a monomial by remembering about that negative sign:

$\alpha \rightarrow \begin{pmatrix} 1 \\ \alpha \end{pmatrix} \rightarrow f(z) = 1z -\alpha $

$\begin{pmatrix} c_{1} \\ c_{0} \end{pmatrix} \rightarrow f(z) = c_{1}z - c_{0} \rightarrow \frac{c_{0}}{c_{1}} $

But what if $\alpha = \infty$? We suggested that this should get mapped to the vector $\begin{pmatrix} 0 \\ 1 \end{pmatrix}$.

Interpreted as a monomial this says that $f(z) = 0z - 1 = -1 \neq 0$. Which has no roots! Indeed, we've reduced the polynomial by a degree: from degree 1 to degree 0. However, it makes a lot of sense to interpret this polynomial as having a root at $\infty$.

And this works in the higher dimensional case too. Suppose we fix the dimension of our vector space to be 4. So we have all the polynomials: $az^{3} + bz^{2} + cz^{1} + d$, but what if $a$ and $b$ were both 0? The polynomial in itself doesn't remember how many higher powers there could have been. So we add two roots at infinity to keep track of things.

So we have a rule: if we lose a degree, we add a root at $\infty$.

There's a more systematic way to deal with this. We homogenize our polynomial. In other words, we add a second variable so that each term in the resulting two-variable polynomial has the same degree. One effect that this has is that if you plug 0 into all the variables then the polynomial evaluates to 0: in the previous case, if there was a constant term, $f(0)$ couldn't be $0$.

So, we want to do something like:

$f(z) = c_{1}z + c_{0} \rightarrow f(w, z) = c_{1}z + c_{0}w$

Suppose we want $f(w, z)$ to have a root $\begin{pmatrix} 1 \\ 0 \end{pmatrix}$. In other words, we want $f(1, 0) = 0$. Then we should pick $f(w, z) = 1z + 0w = z$, which has a root when $z=0$ and $w$ is anything. If we want $f(w, z)$ to have a root $\begin{pmatrix} 0 \\ 1 \end{pmatrix}$, aka $f(0, 1) = 0$, then $f(w, z) = 0z + 1w = w$, which has a root when $w=0$ and $z$ is anything. So we have it now that a $z$-root lives at the North Pole, and a $w$-root lives at the South Pole.

We also have to consider the sign. If we want $f(w, z)$ to have a root $\begin{pmatrix} 2 \\ 3 \end{pmatrix}$, aka $f(2, 3) = 0$, then we want $f(w, z) = 2z - 3w$, so that $f(2, 3) = 2(3) - 3(2) = 0$.

So the correct homogenous polynomial with root $\begin{pmatrix} c_{1} \\ c_{0} \end{pmatrix}$ is $f(w, z) = c_{1}z - c_{0}w$. Or the other way around, if we have $f(w, z) = c_{1}z + c_{0}w$, then its root will be $\begin{pmatrix} c_{1} \\ -c_{0} \end{pmatrix}$, up to overall sign.

The representation of a qubit as a point on the sphere (plus phase) often goes by the name: the Bloch sphere representation. Using the above construction, we can generalize this into the Majorana sphere, which can represent any spin-$j$ state, not just a spin-$\frac{1}{2}$ state, as a constellation of points on the sphere. In other words, we can generalize the above interpretation in terms of the sphere to n-dimensional complex vectors--we'll return to the physical interpretation momentarily. The crucial fact is that there is a representation of Pauli X, Y, and Z on complex vector spaces of any dimension.

If a degree 1 polynomial represents a spin-$\frac{1}{2}$ state (with a corresponding 2D complex vector), a degree 2 polynomial represents a spin-$1$ state (with a corresponding 3D complex vector), a degree 3 polynomial represents a spin-$\frac{3}{2}$ state (with a corresponding 4D complex vector), and so on.

Considering the roots, instead of the coefficients, this is saying that up to a complex phase, a spin-$\frac{1}{2}$ state can be identified with a point on the sphere, a spin-$1$ state with two points on the sphere, a spin-$\frac{3}{2}$ state with three points on the sphere. And the Pauli matrices of that dimensionality generate rotations of the whole constellation.

In other words, somewhat magically, we can take a normalized spin-$2j$ state, which lives in $2j+1$ complex dimensions ($4j+2)$ real dimensions), and which therefore represents a point on a $(4j+1)$-sphere, as a unique constellation of $2j$ points on the $2$-sphere (plus phase)!

And all we're really doing is just finding the roots of a polynomial.

This construction is due to Ettore Majorana, and is often known as the "stellar representation" of spin, and the points on the sphere are often referred to as "stars." The theory of quantum spin, therefore, becomes in many ways the theory of "constellations on the sphere."

So far example, we could have a degree 2 polynomial. For simplicity, let's consider one that has two roots at $\begin{pmatrix} 2 \\ 3 \end{pmatrix}$.

We want: $f(w,z) = (2z - 3w)^2 = (2z - 3w)(2z - 3w) = 4z^2 - 12zw + 9w^2$.

If we want a polynomial with two roots at $\begin{pmatrix} 1 \\ 0 \end{pmatrix}$, we want $f(w, z) = z^2$. If we want a polynomial with two roots at $\begin{pmatrix} 0 \\ 1 \end{pmatrix}$, we want $f(w, z) = w^2$. If we want a polynomial with one root at $\begin{pmatrix} 1 \\ 0 \end{pmatrix}$ and one root at $\begin{pmatrix} 0 \\ 1 \end{pmatrix}$, we want $f(w, z) = zw$.

Indeed, we can use these last three as basis states. Let's take a look:

$ \begin{array}{ |c|c|c| } \hline z^{2} & z & 1 \\ \hline z^{2} & zw & w^{2} & & homogeneous \ roots & roots \\ \hline \hline 1 & 0 & 0 & \rightarrow f(w, z) = z^{2} = 0 & \{ \begin{pmatrix} 1 \\ 0 \end{pmatrix}, \begin{pmatrix} 1 \\ 0 \end{pmatrix} \} & \{ 0, 0 \}\\ 0 & 1 & 0 & \rightarrow f(w, z) = zw = 0 & \{\begin{pmatrix} 1 \\ 0 \end{pmatrix}, \begin{pmatrix} 0 \\ 1 \end{pmatrix} \} & \{ 0, \infty \}\\ 0 & 0 & 1 & \rightarrow f(w, z) = w^{2} = 0 & \{ \begin{pmatrix} 0 \\ 1 \end{pmatrix}, \begin{pmatrix} 0 \\ 1 \end{pmatrix}\} & \{ \infty, \infty\}\\ \hline \end{array} $

So we have three basis states $z^{2}$, $zw$, and $w^{2}$, and they correspond to three constellations, albeit simple ones. The first constellation has two stars at the North Pole. The second has one star at the North Pole and one star at the South Pole. And the third has two stars at the South Pole.

Now the interesting fact is that any 2-star constellation can be written as a complex superposition of these three constellations. We just add up the three monomials, each weighted by some complex factor, and get a polynomial. The stars are just given by the roots of the homogenous polynomial (or the single variable polynomial with the rule about roots at infinity.)

There's one more subtlety which we need to take into account in order to be consistent with how the X, Y, Z Pauli operators are defined (we'll learn how to actually construct the higher dimensional Pauli's later).

Here's the overview:

If we have a spin state in the usual $\mid j, m \rangle$ representation, quantized along the Z-axis, we can express it as a n-dimensional ket, where $n = 2j + 1$. E.g, if j = $\frac{1}{2}$, the dimension of the representation is 2, and the possible $m$ values are $\frac{1}{2}, -\frac{1}{2}$; for j = $1$, the dimension is 3, and the possible $m$ values are $1, 0, -1$, and so on. Again we'll return to the interpretation momentarily.

So we have some spin state: $\begin{pmatrix} a_{0} \\ a_{1} \\ a_{2} \\ \vdots \\ a_{n-1} \end{pmatrix}$

The polynomial whose $2j$ roots correspond to the correct stars, taking into account all the secret negative signs, etc, is given by:

$p(z) = \sum_{m=-j}^{m=j} (-1)^{j+m} \sqrt{\frac{(2j)!}{(j-m)!(j+m)!}} a_{j+m} z^{j-m}$.

$p(z) = \sum_{i=0}^{i=2j} (-1)^{i} \sqrt{\begin{pmatrix} 2j \\ i \end{pmatrix}} a_{i} z^{2j-i}$, where $\begin{pmatrix} n \\ k \end{pmatrix}$ is the binomial coefficient aka $\frac{n!}{k!(n-k)!}$.

Or homogenously, $p(w, z) = \sum_{i=0}^{i=2j} (-1)^{i} \sqrt{\begin{pmatrix} 2j \\ i \end{pmatrix}} a_{i} z^{2j-i} w^{i}$.

This is known as the Majorana polynomial. Why does the binomial coefficient come into play? I'll just note that $\begin{pmatrix} 2j \\ i \end{pmatrix}$ is the number of groupings of $2j$ roots taken 0 at a time, 1 at a time, 2 at a time, 3 at a time, eventually $2j$ at a time. That's just the number of terms in each of Vieta's formulas, which relate the roots to the coefficients! So when we go from a polynomial $\rightarrow$ the $\mid j, m \rangle$ state, we're normalizing each coefficient by the number of terms that contribute to that coefficient.

Let's check this out.

We'll look at the eigenstates of the X, Y, Z operators for a given spin-$j$. X, Y, Z goes from left to right, and the vertical placement of each sphere is given by its $m$ value. Try changing the $j$ value!

import numpy as np

import qutip as qt

import vpython as vp

np.set_printoptions(precision=3)

scene = vp.canvas(background=vp.color.white)

##########################################################################################

# from the south pole

def c_xyz(c):

if c == float("Inf"):

return np.array([0,0,-1])

else:

x, y = c.real, c.imag

return np.array([2*x/(1 + x**2 + y**2),\

2*y/(1 + x**2 + y**2),\

(1-x**2-y**2)/(1 + x**2 + y**2)])

# np.roots takes: p[0] * x**n + p[1] * x**(n-1) + ... + p[n-1]*x + p[n]

def poly_roots(poly):

head_zeros = 0

for c in poly:

if c == 0:

head_zeros += 1

else:

break

return [float("Inf")]*head_zeros + [complex(root) for root in np.roots(poly)]

def spin_poly(spin):

j = (spin.shape[0]-1)/2.

v = spin

poly = []

for m in np.arange(-j, j+1, 1):

i = int(m+j)

poly.append(v[i]*\

(((-1)**(i))*np.sqrt(np.math.factorial(2*j)/\

(np.math.factorial(j-m)*np.math.factorial(j+m)))))

return poly

def spin_XYZ(spin):

return [c_xyz(root) for root in poly_roots(spin_poly(spin))]

##########################################################################################

def display(spin, where):

j = (spin.shape[0]-1)/2

vsphere = vp.sphere(color=vp.color.blue,\

opacity=0.5,\

pos=where)

vstars = [vp.sphere(emissive=True,\

radius=0.3,\

pos=vsphere.pos+vp.vector(*xyz))\

for i, xyz in enumerate(spin_XYZ(spin.full().T[0]))]

varrow = vp.arrow(pos=vsphere.pos,\

axis=vp.vector(qt.expect(qt.jmat(j, 'x'), spin),\

qt.expect(qt.jmat(j, 'y'), spin),\

qt.expect(qt.jmat(j, 'z'), spin)))

return vsphere, vstars, varrow

##########################################################################################

j = 3/2

XYZ = {"X": qt.jmat(j, 'x'),\

"Y": qt.jmat(j, 'y'),\

"Z": qt.jmat(j, 'z')}

for i, o in enumerate(["X", "Y", "Z"]):

L, V = XYZ[o].eigenstates()

for j, v in enumerate(V):

display(v, vp.vector(3*i,3*L[j],0))

spin = v.full().T[0]

print("%s(%.2f):" % (o, L[j]))

print("\t|j, m> = %s" % spin)

poly_str = "".join(["(%.1f+%.1fi)z^%d + " % (c.real, c.imag, len(spin)-k-1) for k, c in enumerate(spin_poly(spin))])

print("\tpoly = %s" % poly_str[:-2])

print("\troots = ")

for root in poly_roots(spin_poly(spin)):

print("\t %s" % root)

print()

So we can see that the eigenstates of the X, Y, Z operators for a given spin-$j$ representation correspond to constellations with $2j$ stars, and there are $2j+1$ such constellations, one for each eigenstate, and they correspond to the following simple constellations:

For example, if $j=\frac{3}{2}$ and we're interested in the Y eigenstates: 3 stars at Y+, 0 stars at Y-; 2 stars at Y+, 1 stars at Y-; 1 star at Y+, 2 stars at Y-; 0 stars at Y+, 3 stars at Y-.

We could choose X, Y, Z or some combination thereof to be the axis we quantize along, but generally we'll choose the Z axis.

The remarkable fact is that any constellation of $2j$ stars can be written as a superposition of these basic constellations. We simply find the roots of the corresponding polynomial.

Finally, let's check our "group equivariance."

In other words, we treat our function $f(w, z)$ as a function that takes a spinor/qubit/2d complex projective vector/spin-$\frac{1}{2}$ state as input. So we'll write $f(\psi_{little})$, and it's understood that we plug the first component of $\psi_{little}$ in for $w$ and the second component in for $z$. And we'll say $f(\psi_{little}) \Rightarrow \psi_{big}$, where $\psi_{big}$ is the corresponding $\mid j, m \rangle$ state of our spin-$j$ . If $\psi_{little}$ is a root, then if we rotate it around some axis, and also rotate $\psi_{big}$ the same amount around the same axis, then $U_{little}\psi_{little}$ should be a root of $U_{big}\psi_{big}$.

import numpy as np

import qutip as qt

import vpython as vp

scene = vp.canvas(background=vp.color.white)

##########################################################################################

# from the south pole

def c_xyz(c):

if c == float("Inf"):

return np.array([0,0,-1])

else:

x, y = c.real, c.imag

return np.array([2*x/(1 + x**2 + y**2),\

2*y/(1 + x**2 + y**2),\

(1-x**2-y**2)/(1 + x**2 + y**2)])

# np.roots takes: p[0] * x**n + p[1] * x**(n-1) + ... + p[n-1]*x + p[n]

def poly_roots(poly):

head_zeros = 0

for c in poly:

if c == 0:

head_zeros += 1

else:

break

return [float("Inf")]*head_zeros + [complex(root) for root in np.roots(poly)]

def spin_poly(spin):

j = (spin.shape[0]-1)/2.

v = spin if type(spin) != qt.Qobj else spin.full().T[0]

poly = []

for m in np.arange(-j, j+1, 1):

i = int(m+j)

poly.append(v[i]*\

(((-1)**(i))*np.sqrt(np.math.factorial(2*j)/\

(np.math.factorial(j-m)*np.math.factorial(j+m)))))

return poly

def spin_XYZ(spin):

return [c_xyz(root) for root in poly_roots(spin_poly(spin))]

def spin_homog(spin):

n = spin.shape[0]

print("".join(["(%.2f + %.2fi)z^%dw^%d + " % (c.real, c.imag, n-i-1, i) for i, c in enumerate(spin_poly(spin))])[:-2])

def hom(spinor):

w, z = spinor.full().T[0]

return sum([c*(z**(n-i-1))*(w**(i)) for i, c in enumerate(spin_poly(spin))])

return hom

def c_spinor(c):

if c == float('Inf'):

return qt.Qobj(np.array([0,1]))

else:

return qt.Qobj(np.array([1,c])).unit()

def spin_spinors(spin):

return [c_spinor(root) for root in poly_roots(spin_poly(spin))]

##########################################################################################

j = 3/2

n = int(2*j+1)

spin = qt.rand_ket(n)

print(spin)

h = spin_homog(spin)

spinors = spin_spinors(spin)

for spinor in spinors:

print(h(spinor))

print()

dt = 0.5

littleX = (-1j*qt.jmat(0.5, 'x')*dt).expm()

bigX = (-1j*qt.jmat(j, 'x')*dt).expm()

spinors2 = [littleX*spinor for spinor in spinors]

spin2 = bigX*spin

print(spin2)

h2 = spin_homog(spin2)

for spinor in spinors2:

print(h2(spinor))

Now let's watch it evolve. You can display a random spin-$j$ state and evolve it under some random Hamiltonian (some $2j+1$ by $2j+1$ dim Hermitian matrix) and watch its constellation evolve, the stars swirling around, permuting among themselves, seeming to repel each other like little charged particles. Meanwhile, you can see to the side the Z-basis constellations, and the amplitudes corresponding to them: in other words, the yellow arrows are the components of the spin vector in the Z basis.

And you can check, for instance, that the whole constellation rotates rigidly around the X axis when you evolve the spin state with $e^{iXt}$, for example.

import numpy as np

import qutip as qt

import vpython as vp

scene = vp.canvas(background=vp.color.white)

##########################################################################################

# from the south pole

def c_xyz(c):

if c == float("Inf"):

return np.array([0,0,-1])

else:

x, y = c.real, c.imag

return np.array([2*x/(1 + x**2 + y**2),\

2*y/(1 + x**2 + y**2),\

(1-x**2-y**2)/(1 + x**2 + y**2)])

# np.roots takes: p[0] * x**n + p[1] * x**(n-1) + ... + p[n-1]*x + p[n]

def poly_roots(poly):

head_zeros = 0

for c in poly:

if c == 0:

head_zeros += 1

else:

break

return [float("Inf")]*head_zeros + [complex(root) for root in np.roots(poly)]

def spin_poly(spin):

j = (spin.shape[0]-1)/2.

v = spin

poly = []

for m in np.arange(-j, j+1, 1):

i = int(m+j)

poly.append(v[i]*\

(((-1)**(i))*np.sqrt(np.math.factorial(2*j)/\

(np.math.factorial(j-m)*np.math.factorial(j+m)))))

return poly

def spin_XYZ(spin):

return [c_xyz(root) for root in poly_roots(spin_poly(spin))]

##########################################################################################

def display(spin, where, radius=1):

j = (spin.shape[0]-1)/2

vsphere = vp.sphere(color=vp.color.blue,\

opacity=0.5,\

radius=radius,

pos=where)

vstars = [vp.sphere(emissive=True,\

radius=radius*0.3,\

pos=vsphere.pos+vsphere.radius*vp.vector(*xyz))\

for i, xyz in enumerate(spin_XYZ(spin.full().T[0]))]

varrow = vp.arrow(pos=vsphere.pos,\

axis=vsphere.radius*vp.vector(qt.expect(qt.jmat(j, 'x'), spin),\

qt.expect(qt.jmat(j, 'y'), spin),\

qt.expect(qt.jmat(j, 'z'), spin)))

return vsphere, vstars, varrow

def update(spin, vsphere, vstars, varrow):

j = (spin.shape[0]-1)/2

for i, xyz in enumerate(spin_XYZ(spin.full().T[0])):

vstars[i].pos = vsphere.pos+vsphere.radius*vp.vector(*xyz)

varrow.axis = vsphere.radius*vp.vector(qt.expect(qt.jmat(j, 'x'), spin),\

qt.expect(qt.jmat(j, 'y'), spin),\

qt.expect(qt.jmat(j, 'z'), spin))

return vsphere, vstars, varrow

##########################################################################################

j = 3/2

n = int(2*j+1)

dt = 0.001

XYZ = {"X": qt.jmat(j, 'x'),\

"Y": qt.jmat(j, 'y'),\

"Z": qt.jmat(j, 'z')}

state = qt.rand_ket(n)#qt.basis(n, 0)#

H = qt.rand_herm(n)#qt.jmat(j, 'x')#qt.rand_herm(n)#

U = (-1j*H*dt).expm()

vsphere, vstars, varrow = display(state, vp.vector(0,0,0), radius=2)

ZL, ZV = qt.jmat(j, 'z').eigenstates()

vamps = []

for i, v in enumerate(ZV):

display(v, vp.vector(4, 2*ZL[i], 0), radius=0.5)

amp = state.overlap(v)

vamps.append(vp.arrow(color=vp.color.yellow, pos=vp.vector(3, 2*ZL[i], 0),\

axis=vp.vector(amp.real, amp.imag, 0)))

T = 100000

for t in range(T):

state = U*state

update(state, vsphere, vstars, varrow)

for i, vamp in enumerate(vamps):

amp = state.overlap(ZV[i])

vamp.axis = vp.vector(amp.real, amp.imag, 0)

vp.rate(2000)

It's worth noting: if the state is an eigenstate of the Hamiltonian, then the constellation doesn't change: there's only a phase evolution. Perturbing the state slightly from that eigenstate will cause the stars to precess around their former locations. Further perturbations will eventually cause the stars to begin to swap places, until eventually it because visually unclear which points they'd been precessing around.

Check out perturb_spin.py! Choose a $j$ value, and it'll show you a random constellation evolving under some random Hamiltonian. We also color the stars and keep track of their paths (which is a little tricky since the roots are in themselves unordered). You can clear the trails with "c", toggle them with "t". You can stop and start evolving with "p", get a new random state with "i", and choose a new random Hamiltonian with "o." Use "e" to measure the energy: it'll collapse to an energy eigenstate, and you'll see its fixed constellation. The phase is shown as a yellow arrow above. Finally, use "a/d s/w z/w" to rotate along the X, Y, and Z directions. Use them to perturb the spin out of its energy eigenstate and watch the stars precess. Precession is what spinning objects like to do, like spinning tops, like the rotating earth whose axis precesses over some 26,000 years, swapping the seasons as it goes.

Now you might wonder: in the above simulations, we evolve our state vector by some tiny unitary at each time step, convert it into a polynomial, break it into its roots, and display them as points on the sphere. Is there some way of taking a Hamiltonian and an initial constellation and getting the time evolution of the individual stars themselves? In other words, can we calculate $\dot{\rho}(t) $ for each of the roots $\rho_{i}$?

Here's how:

Suppose we have a polynomial with two roots $f(z) = (z-\alpha)(z-\beta)$. We could consider the roots to be evolving in time: $f(z, t) = (z-\alpha(t))(z-\beta(t))$. Since the roots determine the polynomial (up to a factor), this is a perfectly good way of parameterizing the time evolution of $f(z, t)$. So we get:

$f(z, t) = z^{2} - \alpha(t)z - \beta(t)z + \alpha(t)\beta(t)$.

Let's consider the partial derivative with respect to time, leaving $z$ as a constant.

$\frac{\partial f(z, t)}{\partial t} = -\dot{\alpha}(t)z - \dot{\beta}(t)z + \alpha(t)\dot{\beta}(t) + \dot{\alpha}(t)\beta(t) = \dot{\alpha}(t)\big{(}\beta(t) - z \big{)} + \dot{\beta}(t)\big{(}\alpha(t) - z \big{)}$.

We've used the chain rule which says that $\frac{d x(t)y(t) }{dt} = x(t)\frac{dy(t)}{dt} + \frac{dx(t)}{dt}y(t)$.

Let's also consider the partial derivative with respect to $z$ leaving $t$ as a constant.

$\frac{\partial f(z,t)}{\partial z} = 2z - \alpha(t) - \beta(t)$.

Finally, let's consider the following interesting expression:

$ - \frac{\frac{\partial f(z, t)}{\partial t}}{\frac{\partial f(z,t)}{\partial z}} = \frac{\dot{\alpha}(t)\big{(}z- \beta(t) \big{)} + \dot{\beta}(t)\big{(} z - \alpha(t) \big{)}}{2z - \alpha(t) - \beta(t)}$

Watch what happens when we substitute in $\alpha(t)$ for $z$:

$ - \frac{\frac{\partial f(z, t)}{\partial t}}{\frac{\partial f(z,t)}{\partial z}} \rvert_{z=\alpha(t)} = \frac{\dot{\alpha}(t)\big{(}\alpha(t) - \beta(t)\big{)} + \dot{\beta}(t)\big{(}\alpha(t) - \alpha(t)\big{)}}{2\alpha(t) - \alpha(t) - \beta(t)} = \frac{\dot{\alpha}(t)\big{(}\alpha(t) - \beta(t)\big{)}}{\alpha(t) - \beta(t)} = \dot{\alpha}(t)$

So that the time derivative of any root is given by:

$ \dot{\rho}(t) = - \frac{\partial_{t} f(z, t)}{\partial_{z} f(z,t)} \rvert_{z=\rho(t)} $

In the quantum case, we have the Schrodinger equation: $\partial_{t}f(z, t) = -i\hat{H}f(z, t)$, where $\hat{H}$ is some differential operator corresponding to the energy. We'll return to this idea later. For now, we observe this implies:

$ \dot{\rho}(t) = \frac{i\hat{H}f(z,t)}{\partial_{z}f(z,t)} \rvert_{z=\rho(t)} $

Now since $\bar{H}$ is a differential operator, we could write it in this form:

$\hat{H} = \sum_{n} c_{n}(z)\partial_{z}^{n}$, where the $c_{n}$'s are some arbitrary expressions involving $z$ and $\partial_{z}^{n}$ is the $n^{th}$ derivative with respect to $z$.

We can then write: $\dot{\rho}(t) = i \sum_{n} c_{n}(z) \frac{\partial_{z}^{n}f(z,t)}{\partial_{z} f(z,t)} \rvert_{z=\rho(t)}$

Here's the cool thing. $\lim_{z\rightarrow \rho(t)} \frac{\partial_{z}^{n}f(z,t)}{\partial_{z} f(z,t)}$ has a simple algebraic expression. Suppose we had some polynomial: $f(z) = (z-\alpha)(z-\beta)(z-\gamma)(z-\delta)$. We've suppressed the time index for now.

$\lim_{z\rightarrow \rho} \frac{\partial_{z}^{1}f(z,t)}{\partial_{z} f(z,t)} = 1$

$\lim_{z\rightarrow \rho} \frac{\partial_{z}^{2}f(z,t)}{\partial_{z} f(z,t)} = 2! \big{(} \frac{1}{(\rho - \beta)} + \frac{1}{(\rho - \gamma)} + \frac{1}{(\rho - \delta)} \big{)} $

$\lim_{z\rightarrow \rho} \frac{\partial_{z}^{3}f(z,t)}{\partial_{z} f(z,t)} = 3! \big{(} \frac{1}{(\rho - \beta)(\rho - \gamma)} + \frac{1}{(\rho - \beta)(\rho - \delta)} + \frac{1}{(\rho - \gamma)(\rho - \delta)} \big{)} $

$\lim_{z\rightarrow \rho} \frac{\partial_{z}^{4}f(z,t)}{\partial_{z} f(z,t)} = 4! \big{(} \frac{1}{(\rho - \beta)(\rho - \gamma)(\rho - \delta)} \big{)} $

So that:

$\dot{\rho}(t) = i \sum_{n} c_{n}(\rho(t)) \tilde{\sum} \frac{n!}{(\rho(t) - \alpha(t))(\rho(t) - \beta(t))\dots(\rho(t) - \zeta_{n-1}(t))}$

Unpacking this, suppose we had three roots {$z_{0}, z_{1}, z_{2}$} and let's consider the time evolution of $z_{0}$:

$\dot{z{0}}(t) = i \Big{(} c{1}(z{0}) + c{2}(z{0}) ( \frac{2!}{(z{0} - z{1})} + \frac{2!}{(z{0} - z_{2})})

- c{3}(z{0}) ( \frac{3!}{(z{0} - z{1})(z{0} - z{2})}) \Big{)}$

For four roots:

$\dot{z_{0}}(t) = i \Big{(} c_{1}(z_{0}) + c_{2}(z_{0}) ( \frac{2!}{(z_{0} - z_{1})} + \frac{2!}{(z_{0} - z_{2})} + \frac{2!}{(z_{0} - z_{3})}) + c_{3}(z_{0}) ( \frac{3!}{(z_{0} - z_{1})(z_{0} - z_{2})} + \frac{3!}{(z_{0} - z_{1})(z_{0} - z_{3})}) + c_{4}(z_{0}) ( \frac{4!}{(z_{0} - z_{1})(z_{0} - z_{2})(z_{0} - z_{3})}) \Big{)} $

So the time evolution can be broken down into 1-body, 2-body, 3-body terms, and so on. We can see that the evolution of the roots is like the classical evolution of $2j$ particles confined to the sphere, feeling 1-body forces that depend only on their own position, 2-body forces that depend on the relative location between them and the other stars individually, 3-body forces that depend on the locations of pairs of stars, then triples, and so on. The particular Hamiltonian determines the strengths of these interactions: the $c$'s, where might be $0$ or which might have some combination of $z$'s and scalars. We'll return to how to figure this after a brief detour.

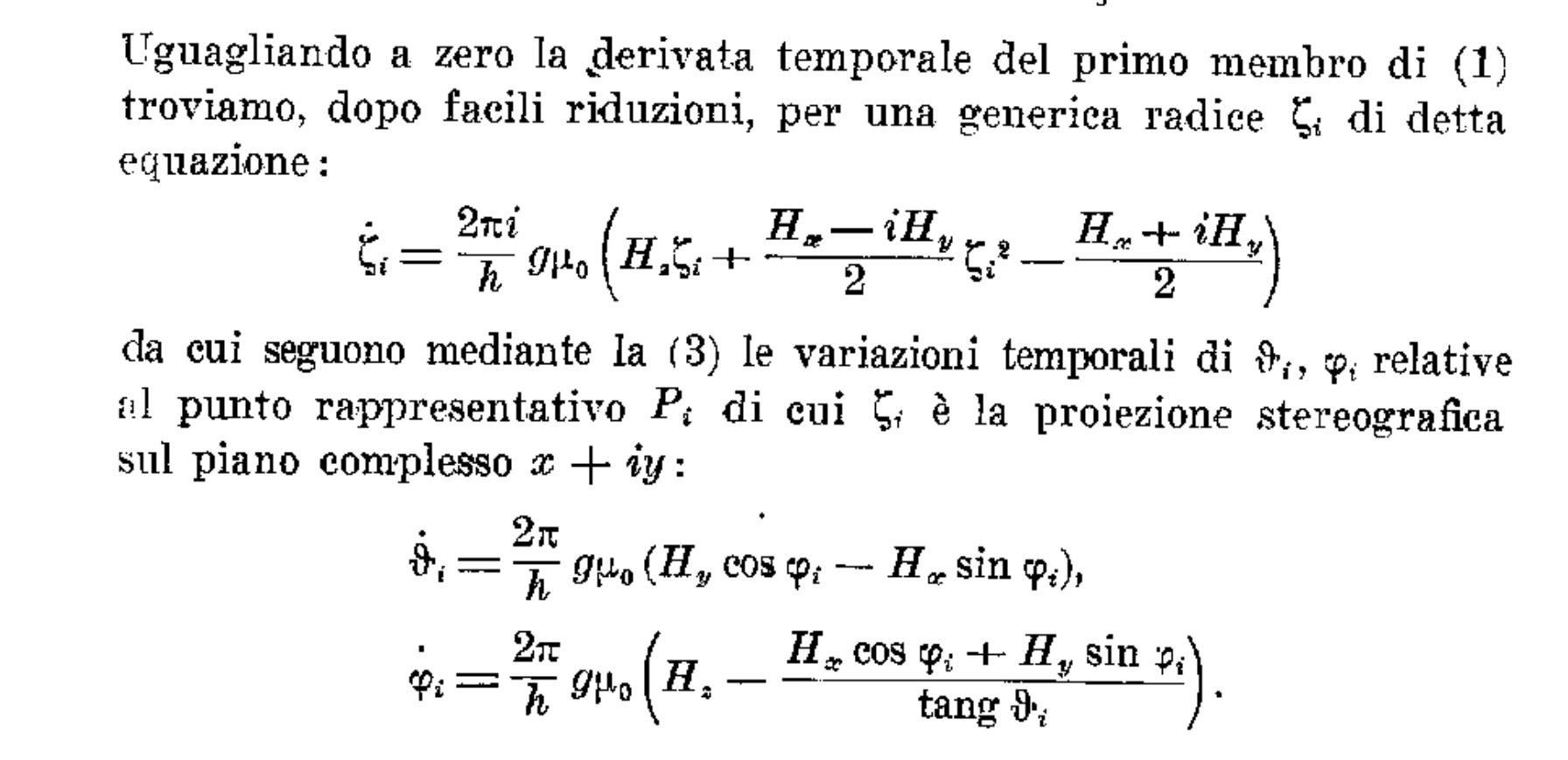

But check out the time derivative of the root in the case of a single spin-$\frac{1}{2}$ in a magnetic field, which Majorana himself found the following expression for, where $H_{x}, H_{y}, H_{z}$ is the direction of the field.

Now you might ask:

After all, if we really can represent a spin-$j$ state as $2j$ points on the sphere, why not just keep track of those $(x, y, z)$ points and not worry about all these complex vectors and polynomials, etc? Even for the spin-$\frac{1}{2}$ case, what's the use of working with a two dimensional complex vector representation anyway?

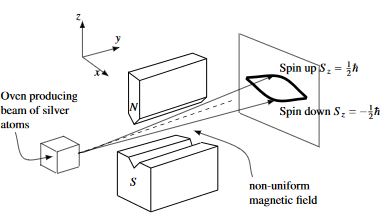

And so, now we need to talk about the Stern-Gerlach experiment.

Suppose we have a bar magnet. It has a North Pole and a South Pole, and it's oriented along some axis. Let's say you shoot it through a magnetic field that's stronger in one direction, weaker up top, stronger down below. To the extent that the magnet is aligned with or against the magnetic field, it'll be deflected up or deflected down and tilted a little bit. For a big bar magnet, it seems like it can be deflected any continuous amount, depending on the original orientation of the magnet.

But what makes a bar magnet magnetic? We know that moving charges produce a magnetic field, so we might imagine that there is something "circulating" within the bar magnet.

What happens if we start dividing the bar magnet into pieces? We divide it in two: now we have two smaller bar magnets, each with their own pole. We keep dividing. Eventually, we reach the "atoms" themselves whose electrons are spin-$\frac{1}{2}$ particles, which means they're spinning, and this generates a little magnetic field: to wit, a spin-$\frac{1}{2}$ particle is like the tiniest possible bar magnet. It turns out that what makes a big bar magnet magnetic is that all of its spins are entangled so that they're all pointing in the same direction, leading to a macroscopic magnetic field.

Okay, so what happens if we shoot a spin-$\frac{1}{2}$ particle through the magnetic field?

Here's the surprising thing. It is deflected up or down by some fixed amount, with a certain probability, and if it is deflected up, then it ends up spinning perfectly aligned with the magnetic field; and if it is deflected down, then it ends up spinning perfectly anti-aligned with the magnetic field. There's only two outcomes.

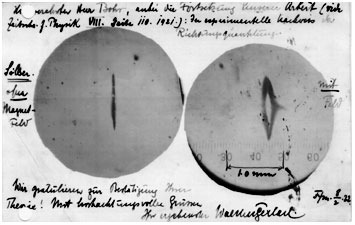

The experiment was originally done with silver atoms which are big neutral atoms with a single unpaired electron in their outer shells. In fact, here's the original photographic plate.

This was one of the crucial experiments that established quantum mechanics. It was conceived in 1921 and performed in 1922. The message is that spin angular momentum isn't "continuous" when you go to measure it; it comes in discrete quantized amounts.

To wit, suppose your magnetic field is oriented along the Z-axis. If you have a spin-$\frac{1}{2}$ state $\begin{pmatrix} a \\ b \end{pmatrix}$ represented in the Z-basis, then the probability that the spin will end up in the $\mid \uparrow \rangle$ state is $aa*$ and the probability that the spin will end up in the $\mid \downarrow \rangle$ state is given by $bb^{*}$. This is of course if we've normalized our state so that $aa^{*} + bb^{*} = 1$, which of course we always can.

What if we measured the spin along the Y-axis? Or the X-axis? Or any axis? We'd express the vector $\begin{pmatrix} a \\ b \end{pmatrix}$ in terms of the eigenstates of the Y operator or the X operator, etc. We'd get another vector $\begin{pmatrix} c \\ d \end{pmatrix}$ and $cc^{*}$ would be the probability of getting $\uparrow$ in the Y direction and $dd^{*}$ would be the probability of getting $\downarrow$ in the Y direction.

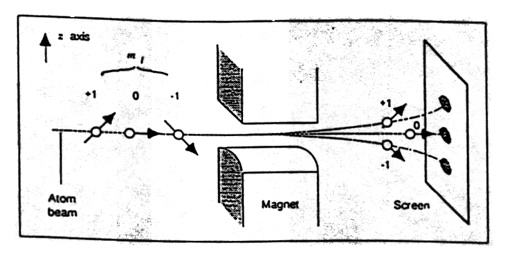

What happens if we send a spin-$1$ particle through the machine?

The particle ends up in one of three locations, and its spin will be in one of the three eigenstates of the operator in question, each with some probability. Recall the eigenstates correspond to: two points at the North pole; one point at North Pole, one point at South Pole; two points at South Pole. So if we're in the Z-basis we have some $\begin{pmatrix} a \\ b \\ c \end{pmatrix}$ and the probability of the first outcome is $aa^{*}$, the second outcome is $bb^{*}$, and the third outcome is $cc^{*}$.

And in general, a spin-$j$ particle sent through a Stern-Gerlach set-up will end up in one of $2j+1$ locations, each correlated with one of the $2j+1$ eigenstates of the spin-operator in that direction.

The idea is that a big bar magnet can be treated as practically a spin-$\infty$ particle, with an infinite number of stars at the same point. And so, it "splits into so many beams" (and is being so massively decohered as it goes along), that it seems to end up in a continuum of possible states and locations.

Okay, so let's take a moment to stop and think about the meaning of "superposition."

If we wanted to be fancy, we could describe a point on a plane $(x, y)$ as being in a "superposition" of $(1,0)$ and $(0,1)$. For example, the point $(1,1)$ which makes a diagonal line from the origin would be an equal superposition of being "horizontal" and "vertical." In other words, we can add locations up and the sum of two locations is also a location.

It's clear that we can describe a point on the plane in many different ways. We could rotate our coordinate system, use any set of two linearly independent vectors in the plane, and write out coordinates for the same point using many different frames of reference. This doesn't have anything to do with the point. The point is where it is. But we could describe it in many, many different ways as different superpositions of different basis states. Nothing weird about that. They just correspond to "different perspectives" on that point.

Another example of superposition, in fact, the ur-example, if you will, is waves. You drop a rock in the water and the water starts rippling outwards. Okay. You wait until the water is calm again, and then you drop another rock in the water at a nearby location and the water ripples outwards, etc. Now suppose you dropped both rocks in at the same time. How will the water ripple? It's just a simple sum of the first two cases. The resulting ripples will be a sum of the waves from the one rock and the waves from the other rock. In other words, you can superpose waves, and that just makes another wave. This works for waves in water, sound waves, even light waves. (And by the way, it's true for forces in Newtonian mechanics as well.)

Waves are described by differential equations. And solutions to (homogenous) differential equations have the property that a sum of two solutions is also a solution.

E.g:

$y'' + 2y' + y = 0$

$z'' + 2z' + z = 0$

$(y+z)'' + 2(y+z)' + (y+z) = y'' + z'' + 2y' + 2z' + y + z = (y'' + 2y' + y) + (z'' + 2z' + z) = 0 + 0 = 0 $

This works because taking the derivative is a linear operator! So that $(y + z)' = y' + z'$.

Let's form a general solution:

$y'' + 2y' + y = 0 \rightarrow k^{2} + 2k + 1 = 0 \rightarrow (k + 1)(k + 1) = 0 \rightarrow k = \{-1,-1\}$

So $y = c_{0}e^{-x} + c_{1}xe^{-x}$. Let's check:

$y' = -c_{0}e^{-x} + c_{1}(-xe^{-x} + e^{-x})$

$y'' = c_{0}e^{-x} + c_{1}(xe^{-x} - e^{-x} - e^{-x})$

$ y'' + 2y' + y = (c_{0}e^{-x} + c_{1}xe^{-x} - c_{1}e^{-x} - c_{1}e^{-x}) + 2(-c_{0}e^{-x} - c_{1}xe^{-x} + c_{1}e^{-x}) + (c_{0}e^{-x} + c_{1}xe^{-x}) = 2c_{0}e^{-x} - 2c_{0}e^{-x} + 2c_{1}xe^{-x} - 2c_{1}e^{-x} + 2c_{1}e^{-x} - 2c_{1}e^{-x} = 0 $

The solutions form a 2D vector space and any solution can be written as a linear combination of $e^{-x}$ and $xe^{-x}$. In other words, I can plug any scalars in for $c_{0}$ and $c_{1}$ and I'll still get a solution.

It is perhaps at first surprising that we see the same thing happen in terms of our constellations. Who knew there was this way of expressing constellations as complex linear combinations of other constellations?

We can take any two constellations (with the same number of stars) and add them together, and we always get a third constellation. Moreover, any given constellation can be expressed in a multitude of ways, as a complex linear combination of (linearly independent) constellations.

We could take one and the same constellation and express it as a complex linear superposition of X eigenstates, Y eigenstates, or the eigenstates of any Hermitian operator. The nice thing about Hermitian operators is that their eigenvectors are all orthogonal, and so we can use them as a basis.

In other words, the constellation can be described in different reference frames in different ways, as a superposition of different basis constellations.

Now the daring thing about quantum mechanics is that it says that basically: everything is just like waves. If you have one state of a physical system and another state of a physical system, then if you add them up, you get another state of the physical system. In theory, everything obeys the superposition principle.

There is something mysterious about this, but not in the way it's usually framed. Considering a spin-$\frac{1}{2}$ particle, it seems bizarre to say that its quantum state is a linear superposition of being $\uparrow$ and $\downarrow$ along the Z axis. But we've seen that it's not bizarre at all. Any point on the sphere can be written as linear superposition of $\uparrow$ and $\downarrow$ along some axis.

So it wouldn't be crazy to imagine the spin definitely just spinning around some definite axis, given by a point on the sphere. But when you go to measure it, you pick out a certain special axis: the axis you're measuring along. You express the point as a linear combination of $\uparrow$ and $\downarrow$ along that axis, and then $aa*$ and $bb*$ give you the probabilities that you get either the one or the other outcome.

In other words, the particle may be spinning totally concretely around some axis, but when you go to measure it, you just get one outcome or the other with a certain probability. You can only reconstruct what that axis must have been before you measured it by preparing lots of such particles in the same state, and measuring them all in order to empirically determine the probabilities. In fact, to nail down the state you have to calculate (given some choice of X, Y and Z) $(\langle \psi \mid X \mid \psi \rangle, \langle \psi \mid Y \mid \psi \rangle, \langle \psi \mid Z \mid \psi \rangle)$, in other words, you have to do the experiement a bunch of times measuring the (identically prepared) particles along three orthogonal axes, and get the proportions of outcomes in each of those cases, weight those probabilities by the eigenvalues of X, Y, Z, and then you'll get the $(x, y, z)$ coordinate of the spin's axis.

So yeah, we could just represent our spin-$j$ state as a set of $(x, y, z)$ points on the sphere. But it's better to use a unitary representation, a representation as a complex vector with unit norm, because then we can describe the constellation as a superposition of outcomes of an experimental situation where the components of the vector have the interpretation of probability amplitudes whose "squares" give the probabilities for those outcomes, and the number of outcomes is the dimensionality of the space.

In other words, quantum mechanics effects a radical generalization of the idea of a perspective shift or reference frame. We normally think of a reference frame as provided by three orthogonal axis in 3D, say, by my thumb, pointer finger, and middle finger splayed out. In quantum mechanics, the idea is that an experimental situation provides a reference frame in the form of a Hermitian operator--an observable--whose eigenstates provide "axes" along which we can decompose the state of a system, even as they correspond to possible outcomes to the experiment. The projection of the state onto each of these axes gives the probability (amplitude) of that outcome.

It's an amazing twist on the positivist idea of describing everything as a black box: some things go in, some things come out, and you look at the probabilities of the outcomes, and anything else one must pass over in silence. For our spin, we can see that its "state" can be fully characterized by experiemental outcomes, but with a twist: the spin state is a superposition of "possible outcomes to an experiment," which at first seems metaphysically bizarre, but in this case can be interpreted geometrically as: a perfectly concrete constellation on the sphere.

Dirac writes in his Principles of Quantum Mechanics, "[QM] requires us to assume that ... whenever the system is definitely in one state we can consider it as being partly in each of two or more other states. The original state must be regarded as the result of a kind of superposition of the two or more new states, in a way that cannot be conceived on classical ideas."

Around the same time, Schrodinger proposed his cat as a kind of reductio ad absurdum to the universal applicability of quantum ideas. He asks us to consider whether a cat can be in a superposition of $\mid alive \rangle \ + \mid dead \rangle$. Such a superposition seems quite magical if we're considering a cat. How can a cat be both alive and dead? And yet, the analogous question for a spin isn't mysterious at all. How can a spin be in a superposition of $\mid \uparrow \rangle \ + \mid \downarrow \rangle$ (in the Z basis)? Easily! It's just pointed in the X+ direction! The analogous thing for a cat would be something like $\mid alive \rangle \ + \mid dead \rangle = \mid sick? \rangle$--I don't propose that seriously, other than to emphasize that what the superposition principle is saying is that superpositions of states are honest states of the system too.

In the case of a quantum spin, we can see that we can regard the spin's constellation as being perfectly definite, but describable from many different reference frames, different linearly independent sets of constellations. Which reference frame you use is quite irrelevant to the constellation, of course, until you go to measure it, and then the spin ends up in one of the reference states with a certain probability. So the mystery isn't superposition per se; it has something to do with measurement. (You might ask how this plays out in non-spin cases, for example, superpositions of position: one clue comes when we start discussing oscillator coherent states. In any case, there may be a certain specialness about the case of quantum spin, but precisely because of that, it can help guide our thinking.)

You might ask: Doesn't the actual Hamiltonian fix a basis, the energy basis? It's quite true: one can decompose a state in the energy basis, and this is just a perspective shift, mere mathematics, as it were: but each state is evolved in time proportional to the energy eigenvalue of that basis state, and so this distinguishes them, treating them differently.